Leveraging Big Data for Scientific Progress

1. Introduction

“I’m okay with people tearing apart everything they’ve ever done and replacing it with what is better, what is relevant, what will create the success we need for the next decade.”


Big data has changed our lives in countless ways. It’s evident every time we stream content customized to our viewing preferences, use a ridesharing service, or check real-time traffic conditions.

The ubiquity of big data makes it all the more surprising that health care has been largely left out of the big data revolution. Yet it’s not as if the data doesn’t exist. Anyone who visits a doctor knows that medical professionals routinely draw on an astonishingly powerful array of diagnostic tools, from electrocardiograms (ECGs) and X-rays to CT scans, MRI, and other digital imaging, to identify and interpret underlying conditions.

But nearly all of that data and all of those images remain isolated, locked away both to protect patient privacy (an understandable concern) and by the siloed nature of a system largely controlled by individual hospitals and health care groups.

The consequences of the disconnect between those who collect medical data and those who could use it in their research can be stark. For all the enormous strides that have been made in understanding how the body works, and how it fails, many mysteries remain unsolved, often with grievous consequences. Sudden cardiac arrest, for example, strikes more than 350,000 Americans every year, and nearly 90% of those cases are fatal. And why did some people die of COVID-19 while others got only a runny nose or no symptoms at all?

Bringing Big Data to Medicine

In 2020, two enterprising scientists came together to tackle this problem with a simple but powerful concept. A new non-profit digital platform, Nightingale Open Science, would gather a vast trove of medical data into a single online resource, scrub all personally identifying information, and make it available to qualified researchers around the world at no cost. Crucially, the platform would link medical images to real patient outcomes rather than to doctors’ opinions, enabling the creation of algorithms that learn from real-world experience and building a bridge between computer science and clinical medicine.

The pair brought an unusual range of skills and experience to the initiative. Sendhil Mullainathan, a computational and behavioral scientist at the University of Chicago, teaches artificial intelligence and uses machine learning to tackle complex problems in human behavior, social policy, and medicine; he is also a recipient of a coveted MacArthur “Genius” Grant. His partner in the effort, Ziad Obermeyer, has experienced the data disconnect from both sides: as a researcher and distinguished professor of health policy at the University of California, Berkeley, and as an emergency physician who still works on the front lines of emergency care, including at a hospital on a Navajo reservation in Arizona.

“The experience of being a doctor is the experience of being incredibly confused and challenged,” Obermeyer says. “Patients are super complicated. And medicine is really complicated. The array of treatments and diagnostics that you can deploy is just immense. One of the things that I realized in my first few years after training was that a bunch of these problems were also the kinds of problems that artificial intelligence is very good at solving.”

Advances in machine learning, Obermeyer and Mullainathan realized, could meaningfully assist practicing physicians, who in the real world are constantly estimating probabilities, such as whether an ER patient with mild cardiac issues should be sent home or kept for further monitoring. In the current system, doctors must interpret the test data themselves and reason probabilistically, something humans notoriously struggle with. Machine learning offers new ways of “seeing” signals and patterns in data that humans cannot, yet doctors have rarely had access to the kind of big data these algorithms rely on, until now. “In the online world,” Obermeyer points out, “when Netflix is deciding which thing to show you, it sees a bunch of stuff about you and a bunch of stuff about other people and tries to predict the probability that you are going to like a movie. Those are the same kind of problems that are common in medicine, probability estimation problems, and those are the kinds of problems where machine learning really shines.”

Nightingale’s vision—which called for transforming more than a century of medical practice—struck a responsive chord at Griffin Catalyst.

In the project’s bold approach, Griffin Catalyst recognized the same kind of openness to innovation and willingness to transform existing sectors that has long characterized Ken Griffin’s own ventures. Griffin Catalyst committed $1 million to help launch Nightingale Open Science, reinforcing support from Schmidt Futures and the Gordon and Betty Moore Foundation.

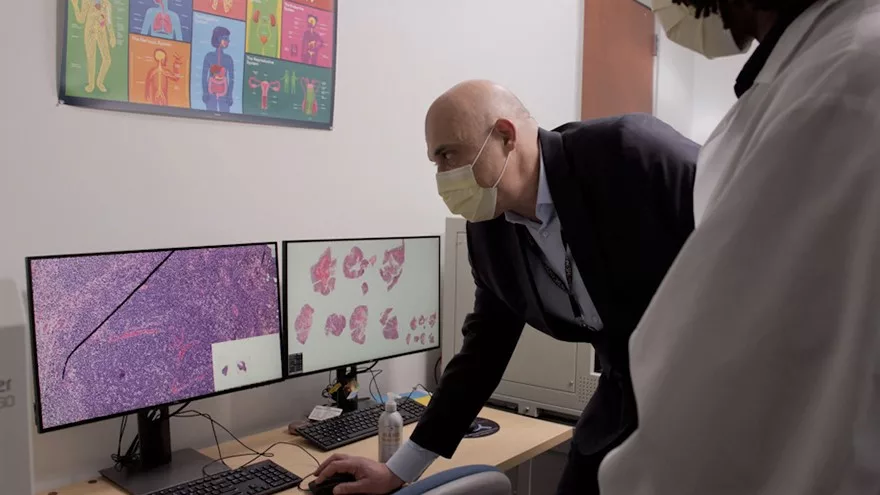

With funding in hand, the platform launched and soon scored its first major victory. Using large-scale slide scanners, it digitized more than a decade’s worth of old biopsy specimens (“literally collecting dust in a basement at [a] health system,” Obermeyer notes), gathering 175,000 digital pathology images from 11,000 patients at risk for breast cancer and linking them to outcomes: the stage of cancer, the type of metastasis, and mortality. With machine learning, researchers were able to identify new “signals” in the images. Beyond the cancerous cells themselves, outcomes showed a surprising correlation with markers in stromal cells, the otherwise healthy cells that surround the cancerous area. That finding gives doctors a powerful new direction for research and potentially a new avenue for predicting and treating the deadly disease.

And that’s just the beginning. Nightingale has now turned to “silent” heart attacks, studying 49,000 ECG waveforms and linking them to cardiac ultrasounds that reveal scars in the heart wall left by a prior heart attack. The aim is to help identify patients who need drug regimens to prevent future cardiac arrest. Next up is COVID-19 triage: the platform will review 7,000 chest X-rays from coronavirus patients and link them to data on pulmonary deterioration (measured by the need for a ventilator) and mortality. The results will help doctors make a critical triage decision for COVID-19 patients: whether they can safely go home or need to be monitored in a hospital.

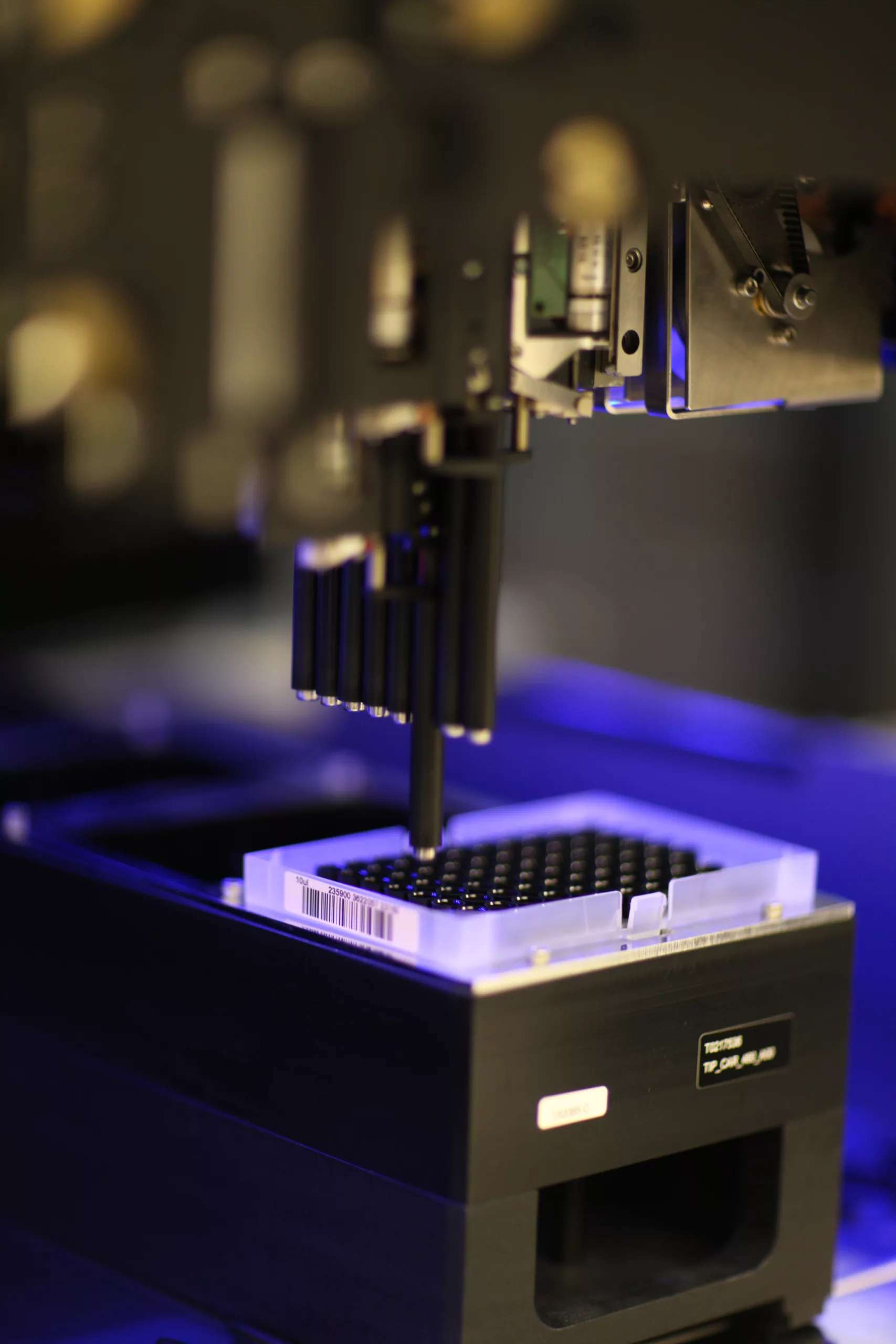

Speeding Scientific Discovery Through Data

Meanwhile, Griffin Catalyst’s commitment to leveraging big data and machine learning for science and medicine continues with newer initiatives. These include a recent $4 million, two-year grant to support the Open Datasets Initiative, an open competition that gives life-science researchers around the world, especially those focused on protein engineering, access to cloud labs where they can automate experiments and gather large datasets. The challenge’s founder, computational physicist Erika Alden DeBenedictis, observed that it took 50 years of data collection to solve protein structure prediction. Her goal is to use automation to solve the mysteries of protein function in just five years, ten times faster than the protein structure breakthrough.

Pandemic Preparedness

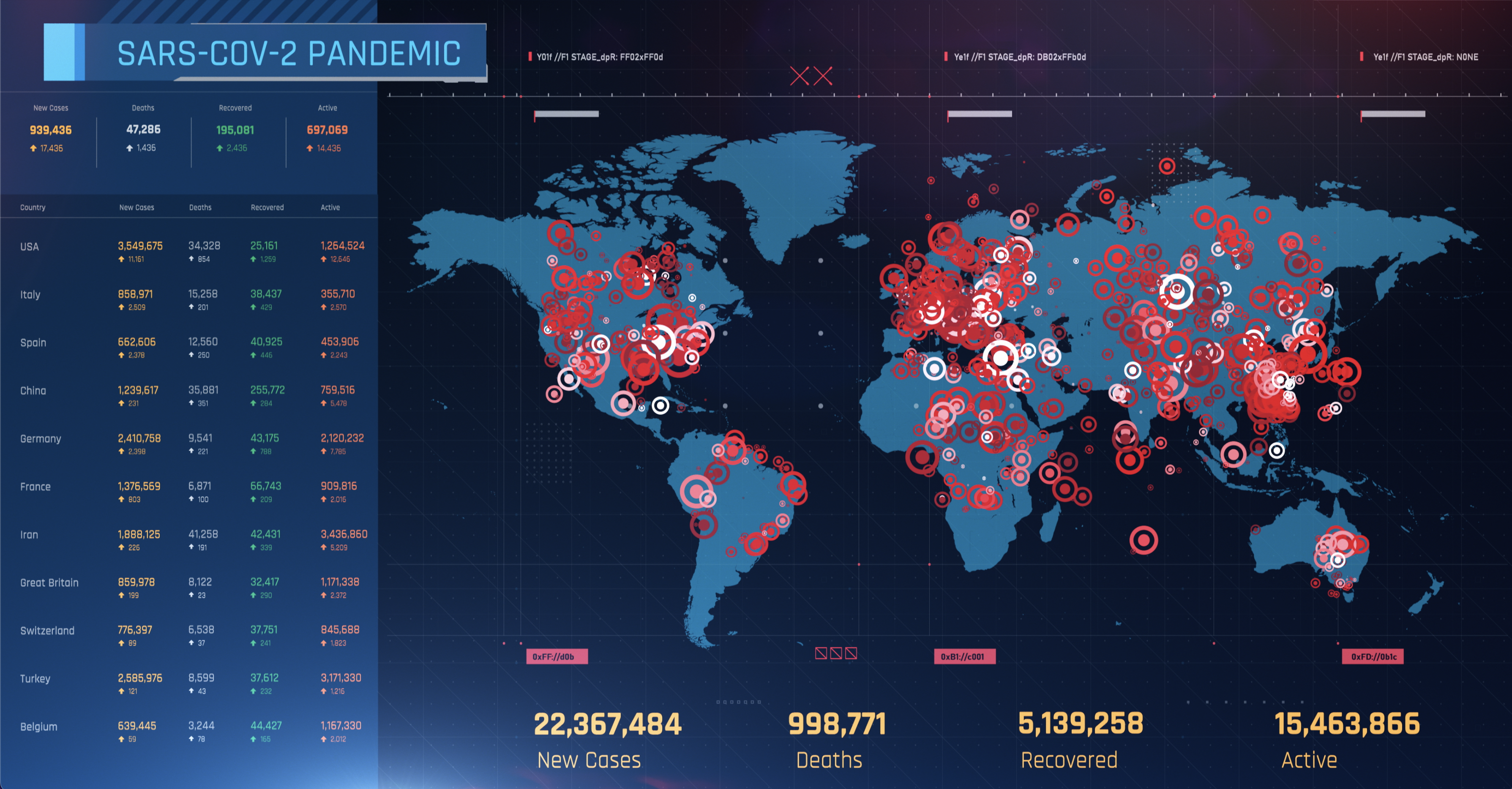

Building on this work to bring the big data revolution to health care, Griffin Catalyst launched the Jameel Institute-Kenneth C. Griffin Initiative for the Economics of Pandemic Preparedness with a $3.2 million gift. The initiative aims to pioneer an integrated approach to economic-epidemiological modeling, bringing together epidemiologists, economists, and data scientists to model preparedness levels and disease response in new ways. The team plans to produce publicly available, scenario-based dashboards modeling the preparedness levels of more than 150 countries, as well as deep-dive studies of specific preparedness interventions. It will also give governments, international health organizations, and businesses evidence on the impact of alternative policy strategies, and work with partners to build a clear case for investing in pandemic preparedness.

After more than two years of widespread disruption and tragic loss from COVID-19, the researchers leading this new initiative are determined to ensure the world is never again caught by surprise. The innovative and imaginative use of big data will be critical to meeting the challenge.